import cv2

import numpy as np

import onnxruntime as ort

import yaml

import hashlib

def name_to_color(name):

"""根据类名生成固定的颜色。"""

hash_str = hashlib.md5(name.encode('utf-8')).hexdigest()

r = int(hash_str[0:2], 16)

g = int(hash_str[2:4], 16)

b = int(hash_str[4:6], 16)

return (r, g, b)

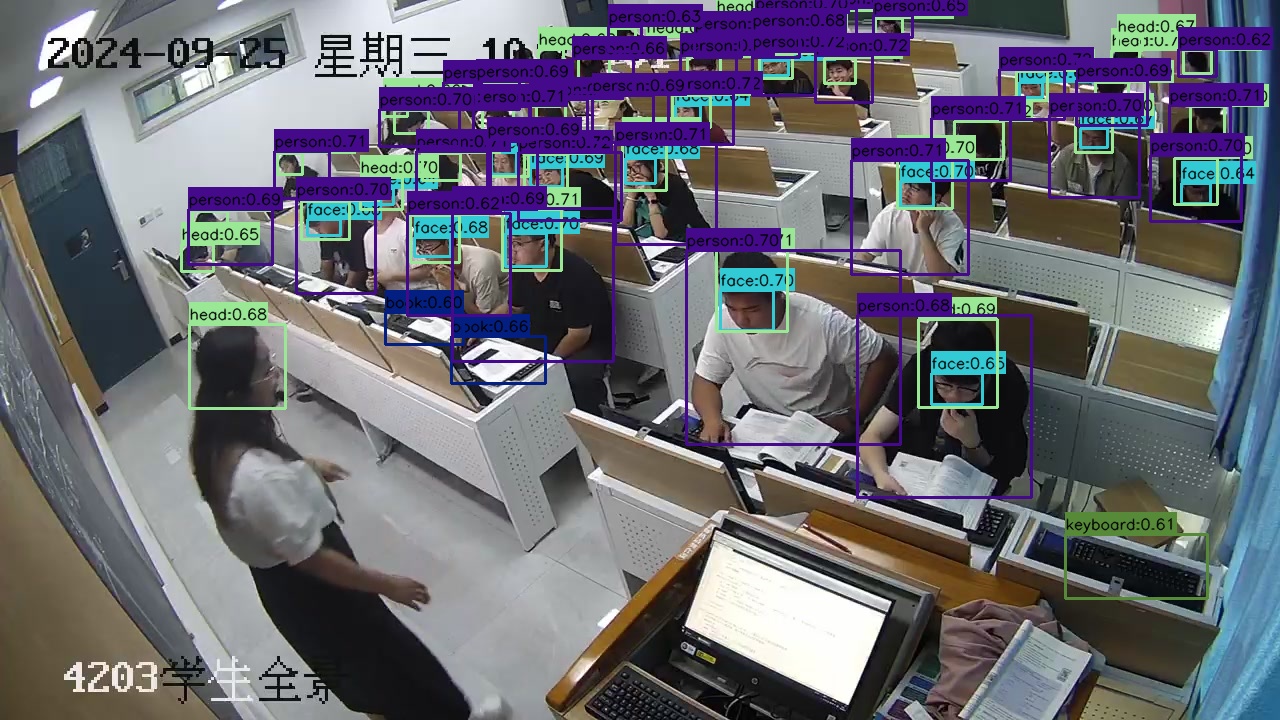

CLASSES = [

'book', 'bottle', 'cellphone', 'drink', 'eat', 'face',

'food', 'head', 'keyboard', 'mask', 'person', 'talk'

]

def sigmoid(x):

"""Sigmoid激活函数。"""

return 1 / (1 + np.exp(-x))

def xywh2xyxy(x):

"""

将 (x, y, w, h) 转换为 (x1, y1, x2, y2)

"""

y = np.copy(x)

y[..., 0] = x[..., 0] - x[..., 2] / 2

y[..., 1] = x[..., 1] - x[..., 3] / 2

y[..., 2] = x[..., 0] + x[..., 2] / 2

y[..., 3] = x[..., 1] + x[..., 3] / 2

return y

def compute_iou(box, boxes):

"""

计算单个box与多个boxes的IoU

box: (4,) -> (x1, y1, x2, y2)

boxes: (N, 4)

"""

xmin = np.maximum(box[0], boxes[:, 0])

ymin = np.maximum(box[1], boxes[:, 1])

xmax = np.minimum(box[2], boxes[:, 2])

ymax = np.minimum(box[3], boxes[:, 3])

inter_w = np.maximum(0, xmax - xmin)

inter_h = np.maximum(0, ymax - ymin)

intersection = inter_w * inter_h

box_area = (box[2] - box[0]) * (box[3] - box[1])

boxes_area = (boxes[:,2] - boxes[:,0]) * (boxes[:,3] - boxes[:,1])

union = box_area + boxes_area - intersection

iou = intersection / union

return iou

def load_model(model_path, providers=['CPUExecutionProvider']):

"""

加载ONNX模型

"""

session = ort.InferenceSession(model_path, providers=providers)

input_names = [inp.name for inp in session.get_inputs()]

output_names = [out.name for out in session.get_outputs()]

input_shape = session.get_inputs()[0].shape

return session, input_names, output_names, input_shape

def preprocess_image(image_path, input_width, input_height):

"""

读取并预处理图像

"""

image = cv2.imread(image_path)

if image is None:

raise FileNotFoundError(f"图像未找到: {image_path}")

original_height, original_width = image.shape[:2]

image_rgb = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

resized = cv2.resize(image_rgb, (input_width, input_height))

input_image = resized.astype(np.float32) / 255.0

input_image = input_image.transpose(2, 0, 1)

input_tensor = np.expand_dims(input_image, axis=0)

return image, input_tensor, original_width, original_height

def postprocess(outputs, original_width, original_height, input_width, input_height, conf_threshold=0.7, iou_threshold=0.5):

"""

后处理步骤,按类别应用NMS

"""

output = outputs[0]

predictions = np.squeeze(output, axis=0).T

print(f"总预测数量: {predictions.shape[0]}")

boxes = predictions[:, :4]

class_scores = sigmoid(predictions[:, 4:])

class_ids = np.argmax(class_scores, axis=1)

confidences = np.max(class_scores, axis=1)

mask = confidences > conf_threshold

boxes = boxes[mask]

confidences = confidences[mask]

class_ids = class_ids[mask]

print(f"应用置信度阈值后: {boxes.shape[0]} 个框")

print(f"置信度分布: 最小={confidences.min():.4f}, 最大={confidences.max():.4f}, 平均={confidences.mean():.4f}")

if len(boxes) == 0:

return [], [], []

boxes_xyxy = xywh2xyxy(boxes)

scale_w = original_width / input_width

scale_h = original_height / input_height

boxes_xyxy[:, [0, 2]] *= scale_w

boxes_xyxy[:, [1, 3]] *= scale_h

boxes_xyxy = boxes_xyxy.astype(np.int32)

boxes_list = boxes_xyxy.tolist()

scores_list = confidences.tolist()

final_boxes = []

final_confidences = []

final_class_ids = []

unique_classes = np.unique(class_ids)

for cls in unique_classes:

cls_mask = class_ids == cls

cls_boxes = [boxes_list[i] for i in range(len(class_ids)) if cls_mask[i]]

cls_scores = [scores_list[i] for i in range(len(class_ids)) if cls_mask[i]]

if len(cls_boxes) == 0:

continue

cls_boxes_xywh = []

for box in cls_boxes:

x1, y1, x2, y2 = box

cls_boxes_xywh.append([x1, y1, x2 - x1, y2 - y1])

indices = cv2.dnn.NMSBoxes(cls_boxes_xywh, cls_scores, conf_threshold, iou_threshold)

if len(indices) > 0:

for i in indices.flatten():

final_boxes.append(cls_boxes[i])

final_confidences.append(cls_scores[i])

final_class_ids.append(cls)

print(f"应用NMS后: {len(final_boxes)} 个框")

return final_boxes, final_confidences, final_class_ids

def visualize(image, boxes, confidences, class_ids, output_path='result.jpg'):

"""

在图像上绘制检测结果

"""

image_draw = image.copy()

for (bbox, score, cls_id) in zip(boxes, confidences, class_ids):

x1, y1, x2, y2 = bbox

cls_name = CLASSES[cls_id]

label = f"{cls_name}:{score:.2f}"

color = name_to_color(cls_name)

cv2.rectangle(image_draw, (x1, y1), (x2, y2), color, 2)

(label_width, label_height), _ = cv2.getTextSize(label, cv2.FONT_HERSHEY_SIMPLEX, 0.5, 1)

cv2.rectangle(image_draw, (x1, y1 - label_height - 10), (x1 + label_width, y1), color, -1)

cv2.putText(image_draw, label, (x1, y1 - 5),

cv2.FONT_HERSHEY_SIMPLEX, 0.5, (0,0,0), 1, cv2.LINE_AA)

cv2.imwrite(output_path, image_draw)

print(f"推理完成,结果已保存为 {output_path}")

def predict(model_path, image_path, output_image_file, conf_threshold=0.6, iou_threshold=0.5):

session, input_names, output_names, input_shape = load_model(model_path, providers=['CPUExecutionProvider'])

_, _, input_height, input_width = input_shape

image, input_tensor, original_width, original_height = preprocess_image(image_path, input_width, input_height)

outputs = session.run(output_names, {input_names[0]: input_tensor})

boxes, confidences, class_ids = postprocess(

outputs,

original_width=original_width,

original_height=original_height,

input_width=input_width,

input_height=input_height,

conf_threshold=conf_threshold,

iou_threshold=iou_threshold

)

if len(boxes) == 0:

print("未检测到任何目标。")

return

visualize(image, boxes, confidences, class_ids, output_path=output_image_file)

if __name__ == "__main__":

model_path = 'classroom_obd.onnx'

image_path = '002899.jpg'

output_image_file = "onnxruntime_result.jpg"

predict(model_path, image_path, output_image_file)